FourCastNet

Download the dataset before starting training or evaluation.

# Evaluate the pre-trained wind model

python train_pretrain.py mode=eval EVAL.pretrained_model_path=https://paddle-org.bj.bcebos.com/paddlescience/models/fourcastnet/pretrain.pdparams

# Evaluate the fine-tuned wind model

python train_finetune.py mode=eval EVAL.pretrained_model_path=https://paddle-org.bj.bcebos.com/paddlescience/models/fourcastnet/finetune.pdparams

# Evaluate the precipitation model

python train_precip.py mode=eval EVAL.pretrained_model_path=https://paddle-org.bj.bcebos.com/paddlescience/models/fourcastnet/precip.pdparams WIND_MODEL_PATH=https://paddle-org.bj.bcebos.com/paddlescience/models/fourcastnet/finetune.pdparams

| Model | Variable | ACC/RMSE (6h) | ACC/RMSE (30h) | ACC/RMSE (60h) | ACC/RMSE (120h) | ACC/RMSE (192h) |
|---|---|---|---|---|---|---|
| Wind model | U10 | 0.991/0.567 | 0.963/1.130 | 0.891/1.930 | 0.645/3.438 | 0.371/4.915 |

| Model | Variable | ACC/RMSE (6h) | ACC/RMSE (12h) | ACC/RMSE (24h) | ACC/RMSE (36h) |
|---|---|---|---|---|---|
| Precipitation model | TP | 0.808/1.390 | 0.760/1.540 | 0.668/1.690 | 0.590/1.920 |

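1. Background

Weather forecasting methods are either physics-driven or data-driven. Physics-driven methods rely on physical equations and forecast the weather by modeling the physical relationships between atmospheric variables. The IFS model, for example, uses more than 150 atmospheric variables distributed over more than 50 vertical levels. Data-driven methods do not rely on physical equations but need large amounts of training data. They generally treat a neural network as a black box and train it to learn the mapping from input data to output data, so that it can predict the output for a given input.

FourCastNet is a data-driven weather forecasting algorithm that uses the Adaptive Fourier Neural Operator (AFNO) for training and prediction. It focuses on two atmospheric variables, the wind speed 10 meters above the Earth's surface and the 6-hourly total precipitation, in order to give early warning of extreme weather and natural disasters. Compared with IFS, it uses only 20 atmospheric variables on 5 vertical levels, so it needs far fewer input variables and runs inference quickly.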

2. Model principles

This chapter gives only a brief introduction to how FourCastNet works. For the detailed theoretical derivation, see the paper FourCastNet: A Global Data-driven High-resolution Weather Model using Adaptive Fourier Neural Operators.

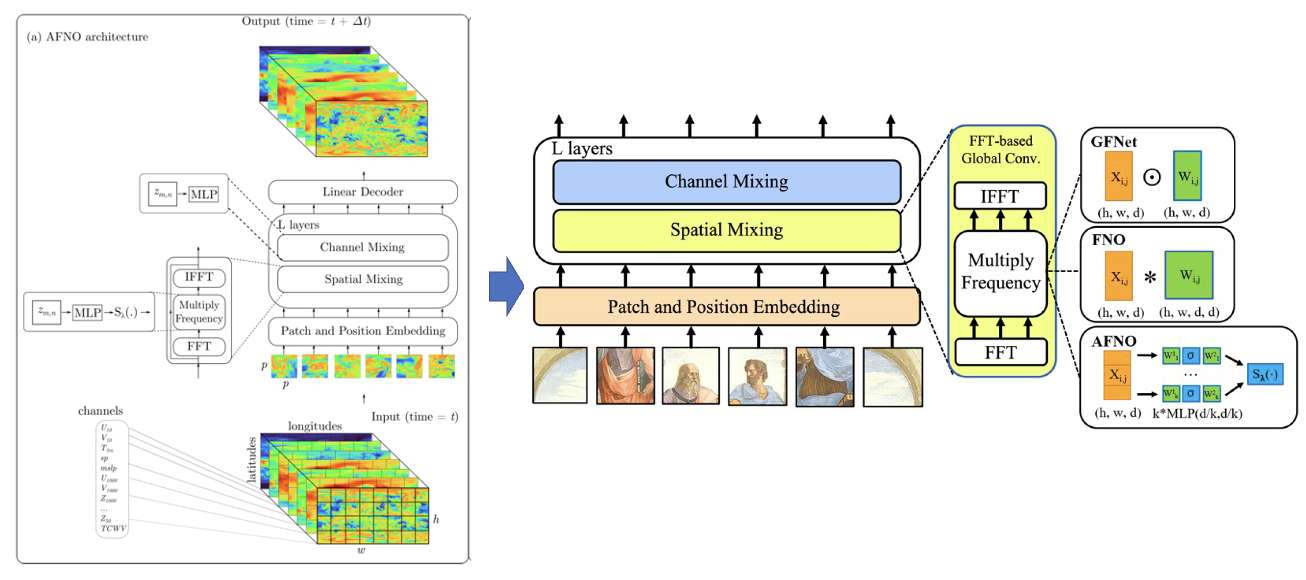

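FourCastNet is built on the AFNO network, which was previously used mainly for image segmentation. AFNO uses the FNO to address a weakness of the ViT network: it mixes information across tokens with a Fourier transform, which significantly reduces the cost of self-attention in ViT at high resolution. For the principles behind AFNO, FNO, and ViT, see the corresponding papers.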

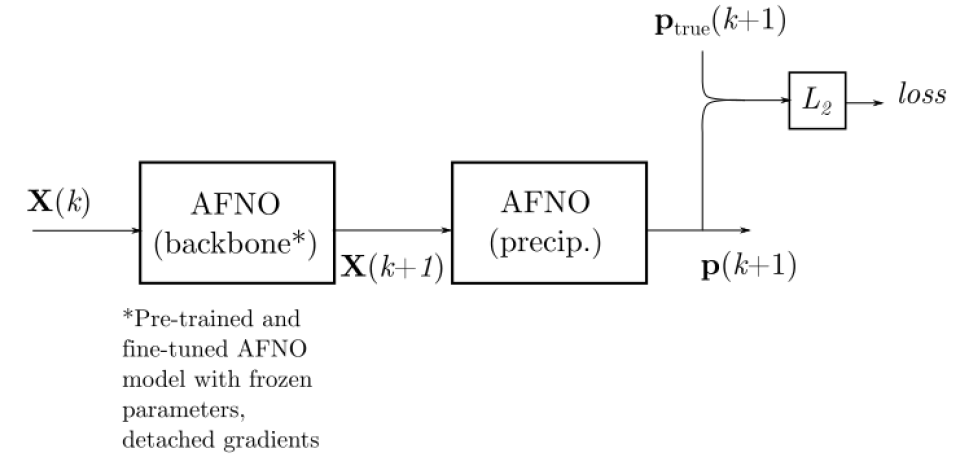

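The overall structure of the model is shown in the figure:

The FourCastNet paper trains a wind model and a precipitation model. The training and inference procedures of both are described next.

2.1 Training and inference of the wind model

Training consists of two steps: pre-training and fine-tuning.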

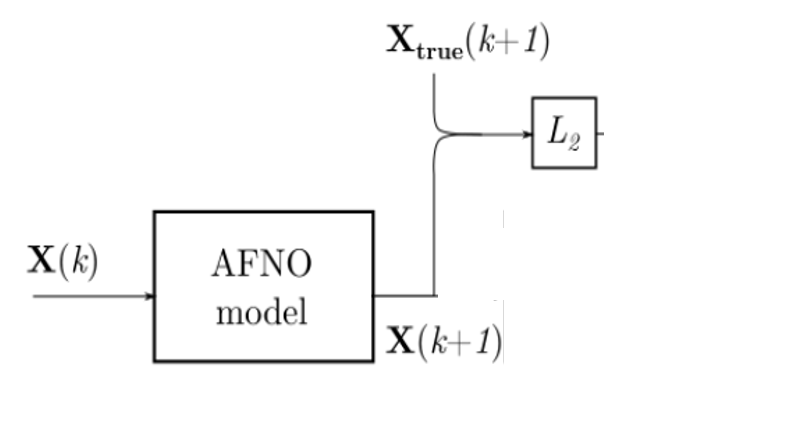

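In the pre-training stage the model is trained from randomly initialized weights, as shown in the figure below. Here \(X(k)\) is the atmospheric data at time step \(k\), \(X(k+1)\) is the atmospheric data that the model predicts for time step \(k+1\), and \(X_{true}(k+1)\) is the true atmospheric data at time step \(k+1\). The L2 loss is computed between the model prediction and the ground truth.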

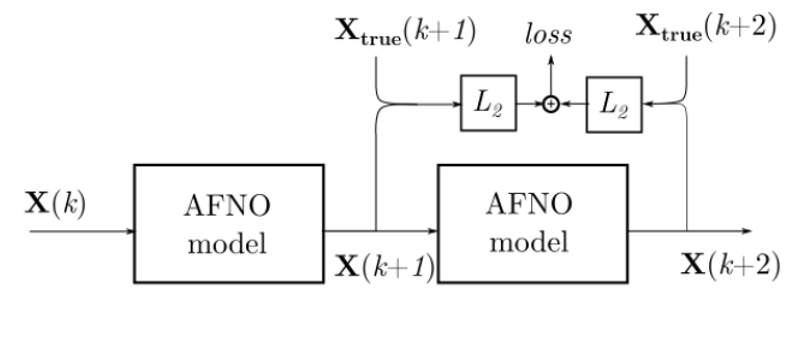

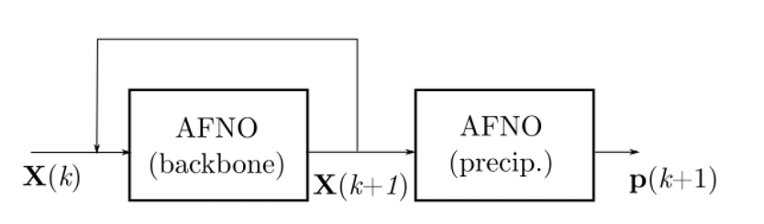

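The second training stage is fine-tuning, whose main purpose is to improve accuracy for medium- and long-range forecasts. Concretely, after the model takes the data at time step \(k\) and predicts time step \(k+1\), that prediction is fed back in as input to predict time step \(k+2\). Training on two consecutive predicted steps in this way improves the model's long-horizon forecasting ability.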
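The two-step objective can be illustrated with a minimal sketch. It is not the PaddleScience implementation: `toy_model` is a hypothetical stand-in for the network, states are plain lists of floats, and summing the two per-step errors is an assumption about how they are combined.

```python
from typing import Callable, List

State = List[float]


def l2_error(pred: State, true: State) -> float:
    """Euclidean distance between a predicted and a true state."""
    return sum((p - t) ** 2 for p, t in zip(pred, true)) ** 0.5


def two_step_loss(
    model: Callable[[State], State],
    x_k: State,
    x_true_k1: State,
    x_true_k2: State,
) -> float:
    """Error at k+1 plus error at k+2, feeding the first prediction back in."""
    x_pred_k1 = model(x_k)        # predict k+1 from the true state at k
    x_pred_k2 = model(x_pred_k1)  # predict k+2 from the predicted state at k+1
    return l2_error(x_pred_k1, x_true_k1) + l2_error(x_pred_k2, x_true_k2)


def toy_model(x: State) -> State:
    """Hypothetical dynamics: damp every value by 10 percent per step."""
    return [0.9 * v for v in x]


# The "truth" here follows the same damping, so the loss is numerically zero.
loss = two_step_loss(toy_model, [1.0, 2.0], [0.9, 1.8], [0.81, 1.62])
```

Because the second prediction starts from the model's own output rather than from ground truth, any error made at \(k+1\) also shows up in the \(k+2\) term, which is what pushes the model toward stable multi-step behavior.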

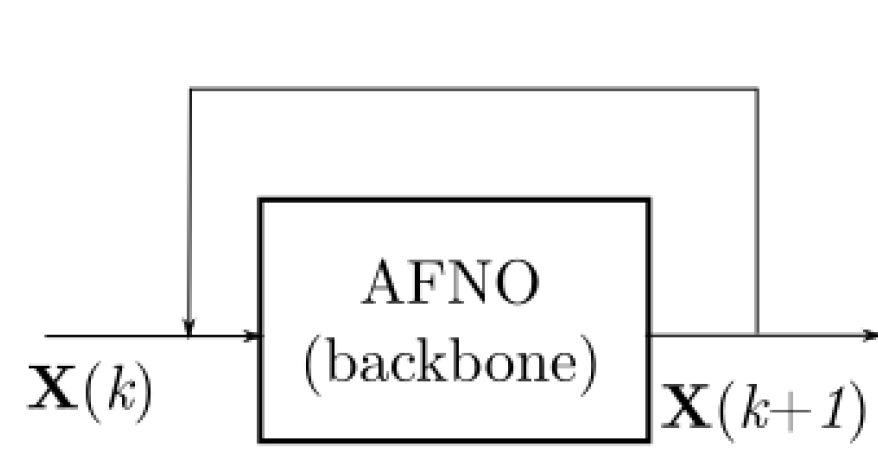

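At inference time, given the data at time step \(k\), the model is applied iteratively to obtain predictions for time steps \(k+1\), \(k+2\), \(k+3\), and so on.

2.2 Training and inference of the precipitation model

Training the precipitation model depends on the wind model. As shown in the figure below, the atmospheric data \(X(k)\) at time step \(k\) is fed to the trained wind model to obtain the predicted atmospheric data \(X(k+1)\) at time step \(k+1\). The precipitation model takes \(X(k+1)\) as input and outputs the precipitation prediction \(p(k+1)\) for time step \(k+1\). During training, the L2 loss between \(p(k+1)\) and the ground truth \(p_{true}(k+1)\) constrains the network.

Note that the parameters of the wind model are frozen while the precipitation model is trained; they take no part in the optimizer's parameter updates.

At inference time, given the data at time step \(k\), the wind model is applied iteratively to obtain the predicted atmospheric variables at time steps \(k+1\), \(k+2\), \(k+3\), and so on. Each of these is then used as input to the precipitation model to predict the precipitation at the corresponding time step.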
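The chained inference described above can be sketched as follows. Both models are hypothetical toy callables, not the trained networks; the point is only the data flow, in which the wind model advances the state and the precipitation model is applied to each predicted state.

```python
from typing import Callable, List, Tuple

State = List[float]


def rollout_with_precip(
    wind_model: Callable[[State], State],
    precip_model: Callable[[State], float],
    x_k: State,
    num_steps: int,
) -> Tuple[List[State], List[float]]:
    """Iterate the wind model and diagnose precipitation from every predicted state."""
    states: List[State] = []
    precip: List[float] = []
    x = x_k
    for _ in range(num_steps):
        x = wind_model(x)               # X(k+i) from X(k+i-1)
        states.append(x)
        precip.append(precip_model(x))  # p(k+i) from the predicted X(k+i)
    return states, precip


def toy_wind_model(x: State) -> State:
    """Hypothetical dynamics: add 1 to every value."""
    return [v + 1.0 for v in x]


def toy_precip_model(x: State) -> float:
    """Hypothetical diagnostic: sum of the state."""
    return sum(x)


states, precip = rollout_with_precip(toy_wind_model, toy_precip_model, [0.0, 0.0], 3)
```

Note that the precipitation output is never fed back into the loop; only the atmospheric state is propagated from step to step.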

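3. Wind model implementation

The following sections explain how to train and run inference with the FourCastNet wind model using PaddleScience. For the remaining details of this example, see the API documentation.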

Info

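A full reproduction requires more than 5 TB of storage and 64 GPUs for training. If the goal is only to learn how the FourCastNet algorithm works, training on a small subset of the training data is recommended to reduce the cost.

3.1 Dataset

The dataset is the ERA5 dataset as preprocessed by FourCastNet. It has a resolution of 0.25 degrees, so each variable has size \(720 \times 1440\), and a single grid point corresponds to roughly 30 km. FourCastNet uses the data from 1979 to 2018, split by year into training, validation, and test sets as follows: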

| Dataset | Years |
|---|---|
| Training set | 1979-2015 |
| Validation set | 2016-2017 |
| Test set | 2018 |

The dataset can be downloaded here.
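The grid size and the quoted spacing of roughly 30 km follow from the 0.25 degree resolution. The short calculation below is a rough check under stated assumptions: a spherical Earth with a mean radius of 6371 km, and a raw latitude axis that includes both poles. The function name is illustrative.

```python
import math

RESOLUTION_DEG = 0.25
EARTH_RADIUS_KM = 6371.0  # mean Earth radius, spherical approximation

n_lon = round(360 / RESOLUTION_DEG)          # points around a latitude circle
n_lat_raw = round(180 / RESOLUTION_DEG) + 1  # pole to pole, both poles included
n_lat = n_lat_raw - 1                        # one latitude row cropped for training


def east_west_spacing_km(lat_deg: float) -> float:
    """Distance between neighboring grid points along a latitude circle."""
    km_per_degree = 2 * math.pi * EARTH_RADIUS_KM / 360
    return RESOLUTION_DEG * km_per_degree * math.cos(math.radians(lat_deg))


spacing_equator = east_west_spacing_km(0.0)  # about 27.8 km
spacing_60n = east_west_spacing_km(60.0)     # about half of that
```

The spacing is largest at the equator and shrinks toward the poles, which is one reason the evaluation metrics used later weight grid points by latitude.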

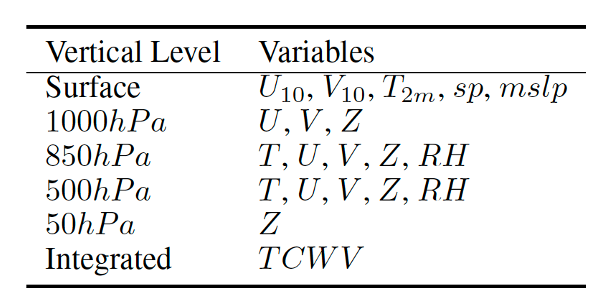

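Training uses 20 atmospheric variables distributed over 5 pressure levels, as shown in the table. \(T\), \(U\), \(V\), \(Z\), and \(RH\) denote temperature, zonal wind speed, meridional wind speed, geopotential, and relative humidity at the specified vertical level. \(U_{10}\), \(V_{10}\), and \(T_{2m}\) denote the zonal and meridional wind speed 10 meters above the ground and the temperature 2 meters above the ground. \(sp\) is the surface pressure, \(mslp\) is the mean sea level pressure, and \(TCWV\) is the total column water vapor.

Each day's 24 hours of data are sampled every 6 hours, giving global data for the 20 atmospheric variables at 00:00, 06:00, 12:00, and 18:00. These samples are used for training and inference: given the 20 variables at 00:00 as input, the model outputs its prediction of the 20 variables at 06:00.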
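The pairing of inputs and targets implied by the 6-hour sampling can be written out explicitly. This sketch only generates timestamps; `six_hourly_pairs` is an illustrative helper, not part of the example code.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

STEP = timedelta(hours=6)


def six_hourly_pairs(start: datetime, num_pairs: int) -> List[Tuple[datetime, datetime]]:
    """Return (input time, target time) pairs that are 6 hours apart."""
    return [(start + i * STEP, start + (i + 1) * STEP) for i in range(num_pairs)]


# Four pairs cover one full day: 00->06, 06->12, 12->18, 18->00 (next day).
pairs = six_hourly_pairs(datetime(2018, 1, 1, 0), 4)
```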

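3.2 Model pre-training

The parameters defined in the code are shown first; the meaning of each one is explained where it is used below.

3.2.1 Constraint construction

This example solves the problem with a data-driven method, so a supervised constraint is built with PaddleScience's built-in SupervisedConstraint. Before the constraint is defined, the data loading parameters it uses must be specified. The data preprocessing part is introduced first. The code is as follows:

Data preprocessing consists of 3 methods:

SqueezeData: compresses the dimensions of the training data. If the input has 4 dimensions, dimensions 0 and 1 are merged so that the result has 3 dimensions.

CropData: crops the training data at a specified position. The raw ERA5 data has size \(721 \times 1440\); following the original paper, this example crops the training data to \(720 \times 1440\).

Normalize: normalizes the data using the mean and variance computed on the training set.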
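The three preprocessing steps can be illustrated on nested lists. This is a sketch, not the PaddleScience transforms: the function names are illustrative, the crop is assumed to start at the top-left corner, and normalization is assumed to divide by the per-variable standard deviation.

```python
from typing import List

Grid = List[List[float]]  # one variable on a (lat, lon) grid


def squeeze(data: List[List[Grid]]) -> List[Grid]:
    """Merge the first two axes of a 4-D nested list (4-D -> 3-D)."""
    return [grid for group in data for grid in group]


def crop(data: List[Grid], height: int, width: int) -> List[Grid]:
    """Keep the top-left height x width window of every grid."""
    return [[row[:width] for row in grid[:height]] for grid in data]


def normalize(data: List[Grid], mean: List[float], std: List[float]) -> List[Grid]:
    """Standardize each variable with its own mean and standard deviation."""
    return [
        [[(v - m) / s for v in row] for row in grid]
        for grid, m, s in zip(data, mean, std)
    ]


# Shape (1, 2, 3, 2): one time step, two variables, 3 x 2 grid.
raw = [[
    [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]],
    [[10.0, 20.0], [30.0, 40.0], [50.0, 60.0]],
]]
# Drop the last latitude row (analogous to 721 -> 720), then standardize.
out = normalize(crop(squeeze(raw), height=2, width=2), mean=[2.5, 25.0], std=[1.0, 10.0])
```

After these steps both variables are on the same scale even though their raw magnitudes differ by a factor of ten.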

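A full reproduction of FourCastNet needs more than 5 TB of storage and 64 GPUs. Because the storage requirement is large, two training modes are provided. Experiments show that the loss curves of the two modes are essentially the same, so mode b can be used when storage is limited.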

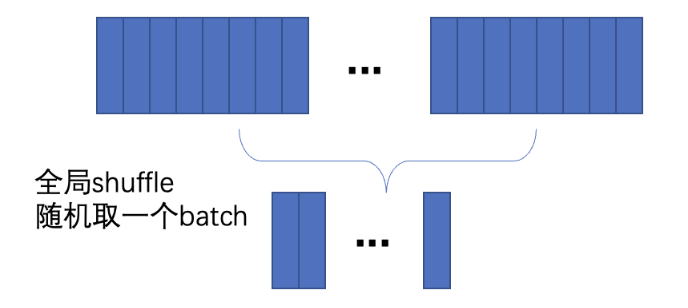

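Mode a: when storage is plentiful, the data need not be partitioned. Every node holds a complete copy of the 5 TB+ training data and the training program is launched directly, so the samples on each node are drawn at random from the full training set. This mode loads training data with a global shuffle, as shown in the figure below.

In this mode, the data loading code is as follows:

The "dataset" field sets the Dataset class to ERA5Dataset, and the "sampler" field sets the Sampler class to BatchSampler, with batch_size set to 1 and num_workers set to 8.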

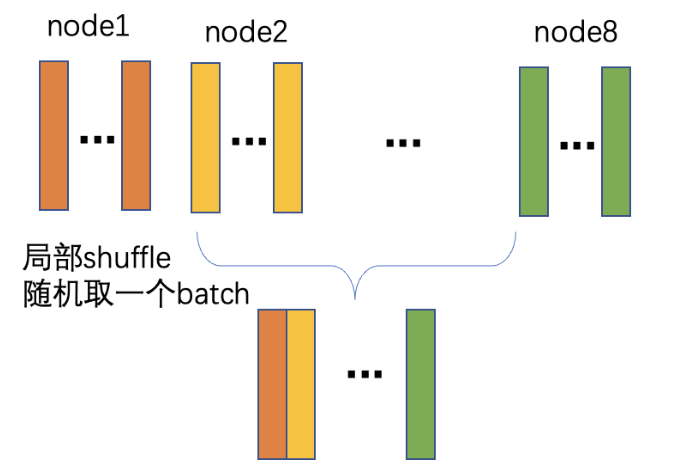

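Mode b: when storage is limited, the dataset must be split evenly across the nodes. This example provides a program that randomly samples the data, ppsci/fourcastnet/sample_data.py, which can be run as is or modified as needed. Mode a is the default, so USE_SAMPLED_DATA must be set to True manually to train with mode b. This mode loads training data with a local shuffle, as shown in the figure below: the training data is first split evenly across 8 nodes, and during training each node draws samples at random only from its own shard. Each node then needs about 1.2 TB of storage, so mode b greatly reduces the storage requirement compared with mode a.

In this mode, the data loading code is as follows:

The "dataset" field sets the Dataset class to ERA5SampledDataset, and the "sampler" field sets the Sampler class to DistributedBatchSampler, with batch_size set to 1 and num_workers set to 8.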
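The difference between the global shuffle of mode a and the local shuffle of mode b can be sketched on sample indices alone. This is an illustration, not what sample_data.py or the samplers do internally: the function names, the seeding scheme, and the strided assignment in the global case are all assumptions.

```python
import random
from typing import List


def split_once(num_samples: int, num_nodes: int, split_seed: int) -> List[List[int]]:
    """Mode b, offline: randomly assign every sample index to exactly one node."""
    indices = list(range(num_samples))
    random.Random(split_seed).shuffle(indices)
    shard_size = num_samples // num_nodes  # any remainder is dropped in this sketch
    return [indices[r * shard_size:(r + 1) * shard_size] for r in range(num_nodes)]


def local_epoch_order(shard: List[int], epoch: int) -> List[int]:
    """Mode b, per epoch: a node reshuffles only the indices in its own shard."""
    order = list(shard)
    random.Random(epoch).shuffle(order)
    return order


def global_epoch_order(num_samples: int, num_nodes: int, rank: int, epoch: int) -> List[int]:
    """Mode a, per epoch: reshuffle all indices; node `rank` takes every num_nodes-th one."""
    indices = list(range(num_samples))
    random.Random(epoch).shuffle(indices)
    return indices[rank::num_nodes]


shards = split_once(num_samples=16, num_nodes=8, split_seed=0)
node0_local = local_epoch_order(shards[0], epoch=0)
node0_global = global_epoch_order(16, 8, rank=0, epoch=0)
```

In the local case a node only ever touches the samples in its shard, so only that shard has to be stored on the node. In the global case any node may be handed any sample, which is why every node needs the full dataset.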

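When a full reproduction of FourCastNet is not needed, simply use the default setting of this example (mode a).

The supervised constraint is defined in examples/fourcastnet/train_pretrain.py.

The first argument of SupervisedConstraint is the data loading configuration; the train_dataloader_cfg defined above is used here.

The second argument is the loss function; L2RelLoss is used here.

The third argument is the name of the constraint, which makes it easy to look up later. Here it is named "Sup".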
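For intuition about the loss, a relative L2 error divides the norm of the prediction error by the norm of the target. The sketch below assumes that definition and operates on flat lists; it is not the source of ppsci.loss.L2RelLoss, whose exact reduction over batches may differ.

```python
import math
from typing import List


def l2_rel_error(pred: List[float], true: List[float]) -> float:
    """||pred - true||_2 / ||true||_2 for one flattened sample."""
    num = math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, true)))
    den = math.sqrt(sum(t ** 2 for t in true))
    return num / den


perfect = l2_rel_error([3.0, 4.0], [3.0, 4.0])  # 0.0: no error
small = l2_rel_error([3.0, 4.5], [3.0, 4.0])    # error norm 0.5 over target norm 5
zeros = l2_rel_error([0.0, 0.0], [3.0, 4.0])    # predicting all zeros costs 1
```

Dividing by the target norm makes the value independent of the physical scale of the variable, which helps when variables with very different magnitudes are trained together.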

3.2.2 Model construction

In this example the wind model is based on the AFNONet network, which is constructed in examples/fourcastnet/train_pretrain.py.

The network parameters are set through the configuration file examples/fourcastnet/conf/fourcastnet_pretrain.yaml.

Here, input_keys and output_keys are the names of the model's input and output variables.

3.2.3 Learning rate and optimizer

This example uses the Cosine learning rate schedule with a learning rate of 5e-4 and the Adam optimizer. Both are set up in examples/fourcastnet/train_pretrain.py.
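A cosine schedule lowers the learning rate smoothly from its initial value toward a minimum over the course of training. The sketch below uses the standard cosine decay formula with no warmup and a minimum of 0; both choices are assumptions and may not match the settings of this example.

```python
import math


def cosine_lr(step: int, total_steps: int, base_lr: float = 5e-4, min_lr: float = 0.0) -> float:
    """Standard cosine decay from base_lr at step 0 to min_lr at total_steps."""
    progress = step / total_steps
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


lr_start = cosine_lr(0, 1000)   # equals base_lr
lr_mid = cosine_lr(500, 1000)   # half of base_lr
lr_end = cosine_lr(1000, 1000)  # equals min_lr
```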

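3.2.4 Evaluator construction

During training, the model is evaluated on the validation set at a fixed interval of epochs, which requires building an evaluator with SupervisedValidator. The code is as follows:

SupervisedValidator is similar to SupervisedConstraint, except that an evaluator also needs evaluation metrics. Three metrics are used here: MAE, LatitudeWeightedRMSE, and LatitudeWeightedACC.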
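The two latitude-weighted metrics can be sketched for a single variable on a small grid. The definitions below follow the form given in the FourCastNet paper as understood here, with weights proportional to the cosine of latitude and normalized to a mean of 1. They are an assumption about the idea behind the metrics, not the PaddleScience classes, and the climatology `clim` is a hypothetical input.

```python
import math
from typing import List

Grid = List[List[float]]  # indexed as grid[lat_index][lon_index]


def latitude_weights(lats_deg: List[float]) -> List[float]:
    """cos(latitude), normalized so that the weights average to 1."""
    cos_lat = [math.cos(math.radians(lat)) for lat in lats_deg]
    mean_cos = sum(cos_lat) / len(cos_lat)
    return [c / mean_cos for c in cos_lat]


def lat_weighted_rmse(pred: Grid, true: Grid, lats_deg: List[float]) -> float:
    """Root of the latitude-weighted mean squared error."""
    w = latitude_weights(lats_deg)
    n = len(pred) * len(pred[0])
    total = sum(
        w[j] * (pred[j][i] - true[j][i]) ** 2
        for j in range(len(pred))
        for i in range(len(pred[0]))
    )
    return math.sqrt(total / n)


def lat_weighted_acc(pred: Grid, true: Grid, clim: Grid, lats_deg: List[float]) -> float:
    """Weighted correlation between predicted and true anomalies from climatology."""
    w = latitude_weights(lats_deg)
    num = den_p = den_t = 0.0
    for j in range(len(pred)):
        for i in range(len(pred[0])):
            p = pred[j][i] - clim[j][i]
            t = true[j][i] - clim[j][i]
            num += w[j] * p * t
            den_p += w[j] * p * p
            den_t += w[j] * t * t
    return num / math.sqrt(den_p * den_t)


lats = [-45.0, 0.0, 45.0]
truth = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
clim = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
biased = [[v + 1.0 for v in row] for row in truth]  # constant error of 1 everywhere
flipped = [[-v for v in row] for row in truth]      # anomalies with opposite sign

rmse_biased = lat_weighted_rmse(biased, truth, lats)
acc_perfect = lat_weighted_acc(truth, truth, clim, lats)
acc_flipped = lat_weighted_acc(flipped, truth, clim, lats)
```

A perfect forecast gives an ACC of 1 and an RMSE of 0, which matches the direction of the trend in the results table: ACC falls and RMSE rises as the lead time grows.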

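3.2.5 Training and evaluation

Once the above is set up, pass the instantiated objects in order to ppsci.solver.Solver and start training and evaluation.

3.3 Model fine-tuning

The previous section described how to pre-train the wind model; this section describes how to fine-tune the pre-trained model. Because pre-training and fine-tuning share most steps, the parts they have in common are not repeated, and only what is specific to fine-tuning is covered. The parameters defined in the code are shown first; the meaning of each one is explained where it is used below.

The fine-tuning program adds a num_timestamps parameter, which controls the number of time steps iterated over during fine-tuning. It is first used in the data loading settings, where it sets the number of time steps of ground truth produced by the dataset. The code is as follows:

num_timestamps is set in the configuration file examples/fourcastnet/conf/fourcastnet_finetune.yaml.

Unlike pre-training, model construction for fine-tuning also takes num_timestamps, which controls the number of time steps in the model's predicted output; see examples/fourcastnet/train_finetune.py.

The fine-tuning program also adds code for evaluating the model on the test set and for visualization. These two parts are described in detail next.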

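3.3.1 Evaluating the model on the test set

Following the settings in the paper, evaluation on the test set uses num_timestamps set to 32 in the configuration file examples/fourcastnet/conf/fourcastnet_finetune.yaml, with an interval of 8 between two adjacent test samples.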
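These two settings can be translated into hours with a little arithmetic. The sketch assumes the 6-hour step described in the dataset section and, for the count of initial conditions, a test year of 365 days with every rollout required to fit inside the year. The count is therefore illustrative, not a number taken from the example code.

```python
STEP_HOURS = 6
NUM_TIMESTAMPS = 32  # autoregressive steps per test sample
STRIDE = 8           # interval between adjacent test samples, in 6-hour steps

# Lead time reached after each autoregressive step: 6, 12, ..., 192 hours.
lead_hours = [STEP_HOURS * (i + 1) for i in range(NUM_TIMESTAMPS)]

# Adjacent initial conditions are 8 steps apart.
hours_between_starts = STRIDE * STEP_HOURS

# Hypothetical count of initial conditions in a 365-day year.
samples_per_year = 365 * 24 // STEP_HOURS
start_indices = list(range(0, samples_per_year - NUM_TIMESTAMPS, STRIDE))
```

The longest lead time is 192 hours, which is the last column of the wind model results table, and the other reported lead times (6, 30, 60, and 120 hours) are all multiples of the 6-hour step.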

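The model is constructed in examples/fourcastnet/train_finetune.py.

The code for constructing the evaluator is as follows:

3.3.2 Visualizer construction

The wind model runs inference autoregressively, so the input data for inference must be set up first; see examples/fourcastnet/train_finetune.py.

That code reads the input data for the model according to the time parameter DATE_STRINGS. In addition, the get_vis_datas function reads the ground-truth data for the corresponding time steps, which is also visualized so that it can be compared with the model's predictions.

Because the model predicts the zonal and meridional wind components separately, the two components must be combined into the actual wind speed. The code is as follows: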
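A minimal sketch of this combination, not the listing from the example script: the wind speed at each grid point is the Euclidean norm of the zonal component \(U_{10}\) and the meridional component \(V_{10}\). The function name is illustrative.

```python
import math
from typing import List

Grid = List[List[float]]


def wind_speed(u: Grid, v: Grid) -> Grid:
    """Point-wise sqrt(u^2 + v^2) for two grids of the same shape."""
    return [
        [math.hypot(a, b) for a, b in zip(row_u, row_v)]
        for row_u, row_v in zip(u, v)
    ]


# 3 m/s eastward with 4 m/s northward gives 5 m/s; direction is discarded.
speed = wind_speed([[3.0, 0.0]], [[4.0, -2.0]])
```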

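Finally, the visualizer is constructed as follows:

The model, evaluator, and visualizer built above are passed to ppsci.solver.Solver to evaluate performance on the test set and to produce the visualizations.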

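4. Precipitation model implementation

The parameters defined in the code are shown first; the meaning of each one is explained where it is used below.

4.1 Constraint construction

This example solves the problem with a data-driven method, so a supervised constraint is built with PaddleScience's built-in SupervisedConstraint. Before the constraint is defined, the data loading parameters it uses must be specified. The data preprocessing part is introduced first. The code is as follows:

Data preprocessing consists of 4 methods:

SqueezeData: compresses the dimensions of the training data. If the input has 4 dimensions, dimensions 0 and 1 are merged so that the result has 3 dimensions.

CropData: crops the training data at a specified position. The raw ERA5 data has size \(721 \times 1440\); following the original paper, this example crops the training data to \(720 \times 1440\).

Normalize: normalizes the data using the mean and variance computed on the training set. The apply_keys field restricts this method to the input data.

Log1p: maps the data to log space. The apply_keys field restricts this method to the ground-truth data.

The data loading code is as follows:

The "dataset" field sets the Dataset class to ERA5Dataset, and the "sampler" field sets the Sampler class to BatchSampler, with batch_size set to 1 and num_workers set to 8.

The supervised constraint is defined in examples/fourcastnet/train_precip.py.

The first argument of SupervisedConstraint is the data loading configuration; the train_dataloader_cfg defined above is used here.

The second argument is the loss function; L2RelLoss is used here.

The third argument is the name of the constraint, which makes it easy to look up later. Here it is named "Sup".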

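4.2 Model construction

In this example, the network structure of the wind model must be defined first and its trained parameters loaded; the precipitation model is defined after that. See examples/fourcastnet/train_precip.py.

The model parameters are set through the configuration file examples/fourcastnet/conf/fourcastnet_precip.yaml.

Here, input_keys and output_keys are the names of the model's input and output variables.

4.3 Learning rate and optimizer

This example uses the Cosine learning rate schedule with a learning rate of 2.5e-4 and the Adam optimizer. Both are set up in examples/fourcastnet/train_precip.py.

4.4 Evaluator construction

During training, the model is evaluated on the validation set at a fixed interval of epochs, which requires building an evaluator with SupervisedValidator. The code is as follows:

SupervisedValidator is similar to SupervisedConstraint, except that an evaluator also needs evaluation metrics. Three metrics are used here: MAE, LatitudeWeightedRMSE, and LatitudeWeightedACC.

4.5 Training and evaluation

Once the above is set up, pass the instantiated objects in order to ppsci.solver.Solver and start training and evaluation.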

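4.6 Evaluating the model on the test set

Following the settings in the paper, evaluation on the test set uses num_timestamps set to 6, with an interval of 8 between two adjacent test samples.

The code for constructing the model is as follows:

The code for constructing the evaluator is as follows: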

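4.7 Visualizer construction

The precipitation model runs inference autoregressively, so the input data for inference must be set up first; see examples/fourcastnet/train_precip.py.

That code reads the input data for the model according to the time parameter DATE_STRINGS. In addition, the get_vis_datas function reads the ground-truth data for the corresponding time steps, which is also visualized so that it can be compared with the model's predictions.

Because the precipitation was log-transformed for the model, the model output must be mapped back to linear space. The code is as follows: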
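A minimal sketch of the inverse mapping, not the listing from the example script. It assumes the forward transform is \(\log(1 + TP/\epsilon)\) with \(\epsilon = 10^{-5}\), the form described in the FourCastNet paper; the scale actually used by the Log1p transform in this example should be checked in its configuration. The function names are illustrative.

```python
import math

EPS = 1e-5  # assumed scale; check the Log1p settings of the example


def to_log_space(tp: float) -> float:
    """Forward transform applied to the ground truth: log(1 + tp / EPS)."""
    return math.log1p(tp / EPS)


def to_linear_space(y: float) -> float:
    """Inverse transform applied to the model output: EPS * (exp(y) - 1)."""
    return EPS * math.expm1(y)


# A round trip recovers the original precipitation value up to rounding.
tp = 0.0032
recovered = to_linear_space(to_log_space(tp))
```

Using `log1p` and `expm1` rather than `log` and `exp` keeps the mapping accurate near zero, where most precipitation values lie, and maps zero precipitation exactly to zero.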

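Finally, the visualizer is constructed as follows:

The model, evaluator, and visualizer built above are passed to ppsci.solver.Solver to evaluate performance on the test set and to produce the visualizations.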

5. Complete code

examples/fourcastnet/train_pretrain.py

examples/fourcastnet/train_finetune.py

examples/fourcastnet/train_precip.py

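6. Results

The figure below shows the wind model's predictions and the ground truth at 6-hour intervals.

The figure below shows the precipitation model's predictions and the ground truth at 6-hour intervals.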